Computing With Life

A cluster of living neurons grown in a dish has learned to play a video game. The experiment belongs to the emerging field of biological computing, which may change how we think about intelligence itself.

A new dawn of scientific advancement has arrived, and it is not where you might think. If I asked you right now where most scientific progress is happening, you might point to the ongoing revolution in artificial intelligence, or perhaps to breakthroughs in genetic engineering with CRISPR.

However, another frontier has quietly taken root far from vast data centres and new chatbots: in small laboratory dishes holding neurons too small to see with the naked eye. These tiny, almost insignificant clusters of brain cells, kept alive on electronic chips, are somehow able to learn, adapt, and even play games. What once sounded like a science fiction writer's wildest dream has become a striking reality: a direct fusion of biology and technology.

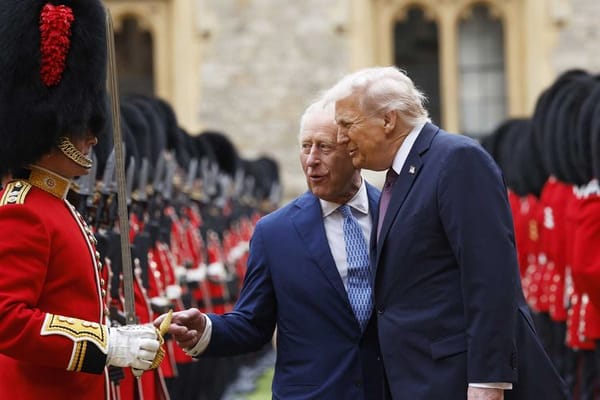

In 2022, a team of researchers in Melbourne created what they called "DishBrain," a network of human and rodent neurons grown on a multi-electrode array. The cells were linked to a simple video game, Pong, and placed in a feedback loop in which their electrical activity moved a paddle on the screen. When the neurons fired in patterns that kept the ball in play, they received predictable signals; when they failed, the input became chaotic. Over time, the neurons reorganised themselves to reduce error and improve performance. In other words, a culture of brain cells with no eyes, no hands, and no consciousness has somehow learned to play a game once reserved for humans and computers.

How the Dish Learns to Play

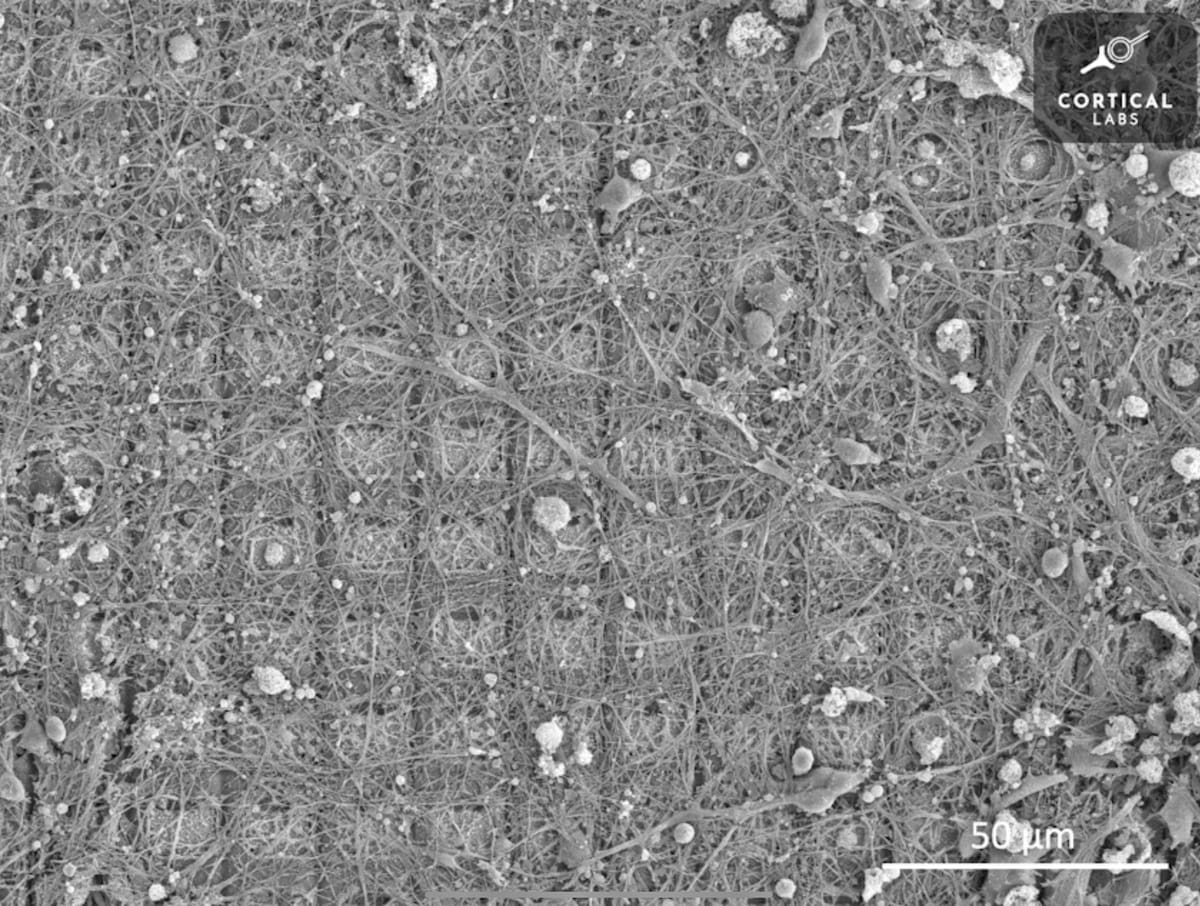

At the heart of the system is a dense grid of electrodes that both "listen" to the neurons and "speak" back to them. The game state is encoded as tiny patterns of electrical stimulation across this grid, so the culture receives a kind of artificial sensory scene: the location of the ball, how fast it moves, and whether contact was made. The neurons respond with spikes, brief electrical pulses that propagate through the network. Software translates certain coordinated spike patterns into paddle movements, closing the loop.

Crucially, the feedback is shaped: when the network generates activity that keeps the ball in play, the next inputs become orderly and predictable; when it fails, the inputs become noisy and harsh. Neurons appear to favour predictability over noise. Through synaptic plasticity, the microscopic strengthening and weakening of connections between neurons, the culture gradually reorganises to produce the patterns that bring order back. The result is not intelligence in the human sense, but it is unmistakably, and somewhat eerily, learning.
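To make the feedback logic of this loop concrete, here is a toy Python sketch. It is not the DishBrain software and not a model of real neurons: the "culture" is a hypothetical table of connection strengths, the eight positions (`N_SITES`) stand in for electrode sites, and the learning rule (strengthen a pathway after a hit, scramble it after a miss) is an illustrative assumption that loosely mirrors the predictable-versus-noisy feedback described above.

```python
import random

# Toy sketch of a closed sensory-motor loop. NOT the DishBrain model:
# the "culture" is a hypothetical N_SITES x N_SITES table of connection
# strengths, and the learning rule below is an illustrative assumption.

N_SITES = 8  # hypothetical number of positions / electrode sites


def encode(ball_pos: int) -> list[float]:
    """Sensory input: stimulate only the site matching the ball's position."""
    return [1.0 if i == ball_pos else 0.0 for i in range(N_SITES)]


def decode(weights: list[list[float]], stimulus: list[float]) -> int:
    """Motor output: move the paddle to the unit that responds most strongly."""
    activity = [sum(w * s for w, s in zip(row, stimulus)) for row in weights]
    return max(range(N_SITES), key=lambda j: activity[j])


def run_loop(trials: int = 2000, seed: int = 0) -> list[bool]:
    """Run the closed loop and record whether each trial was a hit."""
    rng = random.Random(seed)
    # weights[j][i]: connection from input site i to output unit j
    weights = [[rng.random() for _ in range(N_SITES)] for _ in range(N_SITES)]
    hits = []
    for _ in range(trials):
        ball = rng.randrange(N_SITES)
        paddle = decode(weights, encode(ball))
        hit = paddle == ball
        hits.append(hit)
        if hit:
            # Predictable feedback (assumed rule): the pathway that just
            # worked is strengthened, so it keeps winning for this input.
            weights[paddle][ball] += 0.1
        else:
            # Unpredictable feedback (assumed rule): random stimulation
            # scrambles every connection from this input site.
            for j in range(N_SITES):
                weights[j][ball] = rng.random()
    return hits


if __name__ == "__main__":
    hits = run_loop()
    early = sum(hits[:100]) / 100
    late = sum(hits[-500:]) / 500
    print(f"hit rate, first 100 trials: {early:.2f}")
    print(f"hit rate, last 500 trials:  {late:.2f}")
```

Running it should show a much higher hit rate in the later trials than in the first hundred: pathways that earn predictable feedback are kept, while the rest keep getting reshuffled until they work. Real cultures are far messier; the sketch only illustrates why "order after success, noise after failure" can be enough to drive learning.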

Why Does This Matter?

In a world racing for ever-better artificial intelligence and slightly better computer chips, living neural networks offer a different perspective entirely: intelligence can be small, frugal, and startlingly adaptive. Like the neurons in your brain right now, these cells reorganise themselves in real time, learning from sparse feedback rather than millions of labelled examples while consuming orders of magnitude less power. This is what makes the field so intriguing, and so worth investing in, for medicine: cultures grown from cells donated by patients could help test new drugs, combat neurodegenerative disease, or reveal how anaesthetics actually work.

It also matters for computing. Biological networks hint at learning rules (prediction, plasticity, stability-seeking) that could inspire algorithms more efficient than today's power-hungry models. Perhaps one day, in hybrid systems, silicon will handle exact arithmetic while living tissue explores patterns, noise, and subtle uncertainty with a grace that current chips struggle to match, or match only at a great cost in power. For students, the field offers a hands-on way to explore how living networks process information, and it suggests that the principles of learning may be simpler, smaller, and more universal than we once thought.

Bibliography:

Kagan, Brett J., et al. "In Vitro Neurons Learn and Exhibit Sentience When Embodied in a Simulated Game-World." Neuron, vol. 110, no. 23, 2022, pp. 3952–3969.e8. https://doi.org/10.1016/j.neuron.2022.09.001.

Piradov, Alexander, et al. "Microelectrode Arrays for Recording and Stimulation: A Review." Frontiers in Neuroscience, vol. 16, 2022. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9147888/.

Liu, Guanlei, and Tommaso Pizzorusso. "Synaptic Plasticity." StatPearls, StatPearls Publishing, 2023. https://www.ncbi.nlm.nih.gov/books/NBK557811/.

Jinek, Martin, et al. "A Programmable Dual-RNA–Guided DNA Endonuclease in Adaptive Bacterial Immunity." Science, vol. 337, no. 6096, 2012, pp. 816–821. https://doi.org/10.1126/science.1225829.